In this post we're going to compare two popular Rust libraries used for building web applications. We'll write an example in each and compare their ergonomics and how they perform.

The first library, Hyper, is a low-level HTTP library which contains the primitives for building server applications. The second library, Rocket, comes with more "batteries included" and provides a more declarative approach to building web applications.

The Demo

We're going to build a simple site to showcase how each library implements:

Routing

Routing decides what to respond with for a given URL. Some paths are fixed: in our example we will have a fixed route / which returns Hello World. Other paths are dynamic and can have parameters: in the example we will have /hello/*name*, which responds with Hello, *name*! with *name* substituted from the URL in each response.

Shared state

We want to have a central state for the application.

In this demo we will have a central site visitor counter which counts the number of requests. This number can be viewed as JSON on the /counter.json route. In this example we will be storing the counter in application memory. However, if you were storing it in a database, the shared state would be a database client.

There is lots of other functionality necessary for a site, such as handling HTTP methods, receiving data, rendering templates and error handling. But for the scope of this post and example we will only be comparing these two features.

The rules

The rule for this demonstration is to only use the specific library and any of its re-exported dependencies. So no additional libraries (except that the Hyper example needs tokio for tokio::main).

Hyper

Hyper's readme describes hyper as "A fast and correct HTTP implementation for Rust with client and server APIs". For this demo we will be using the server side of the library. It has 9.7k stars on GitHub and 48M crate downloads. It is often used as a dependency, and many other libraries such as reqwest and tonic build on top of it.

In this example we see how far we can get using just the library. This demo uses Hyper 0.14 [1]. Below is the full code for the site:

use hyper::server::conn::AddrStream;
use hyper::service::{make_service_fn, service_fn};
use hyper::{Body, Request, Response, Server};
use std::convert::Infallible;
use std::sync::{atomic::AtomicUsize, Arc};

#[derive(Clone)]
struct AppContext {
    pub counter: Arc<AtomicUsize>,
}

async fn handle(context: AppContext, req: Request<Body>) -> Result<Response<Body>, Infallible> {
    // Increment the visit count. fetch_add returns the previous value, so add 1
    // to get the count that includes this request.
    let new_count = context
        .counter
        .fetch_add(1, std::sync::atomic::Ordering::SeqCst)
        + 1;

    // Only GET routes exist, so reject every other method (405 Method Not Allowed)
    if req.method().as_str() != "GET" {
        return Ok(Response::builder().status(405).body(Body::empty()).unwrap());
    }

    let path = req.uri().path();
    let response = if path == "/" {
        Response::new(Body::from("Hello World"))
    } else if path == "/counter.json" {
        // Hand-written JSON: {"counter":<n>}
        let data = format!("{{\"counter\":{}}}", new_count);
        Response::builder()
            .header("Content-Type", "application/json")
            .body(Body::from(data))
            .unwrap()
    } else if let Some(name) = path.strip_prefix("/hello/") {
        Response::new(Body::from(format!("Hello, {}!", name)))
    } else {
        Response::builder().status(404).body(Body::empty()).unwrap()
    };

    Ok(response)
}

#[tokio::main]
async fn main() {
    let context = AppContext {
        counter: Arc::new(AtomicUsize::new(0)),
    };

    // Build a service per connection; each one gets its own clone of the context
    let make_service = make_service_fn(move |_conn: &AddrStream| {
        let context = context.clone();
        let service = service_fn(move |req| handle(context.clone(), req));
        async move { Ok::<_, Infallible>(service) }
    });

    let server = Server::bind(&"127.0.0.1:3000".parse().unwrap())
        .serve(make_service)
        .await;

    if let Err(e) = server {
        eprintln!("server error: {}", e);
    }
}

At the top we define a handle function which processes all the requests.

Routing is done through the chain of ifs and elses in the handle function. First the path of the request (e.g. / for the index) is extracted using req.uri().path(). Fixed routes are easy to branch on using string comparison like path == "/". For routes which match multiple paths, such as the /hello/<name> route, it uses str::strip_prefix, which returns None if the path doesn't start with the prefix, or Some containing the slice that follows the prefix if it does.

"/".strip_prefix("/hello/") == None

"/test".strip_prefix("/hello/") == None

"/hello/jack".strip_prefix("/hello/") == Some("jack")

The function has an early return for requests with a method other than GET because there are no POST or other routes in this example. If the site accepted different request types and needed additional guards, we could add additional clauses to the if statement. You can see, though, how expanding the if chain would get more complex and verbose.
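One way to keep the branching readable as methods are added is to match on the method and path together. The sketch below uses plain strings rather than Hyper's request types so that it stands alone; the Route enum and the route function are hypothetical names, not part of Hyper or of the demo above.

```rust
/// The routes of the demo site, plus the two failure cases.
#[derive(Debug, PartialEq)]
enum Route<'a> {
    Index,
    Counter,
    Hello(&'a str),
    MethodNotAllowed,
    NotFound,
}

/// Picks a route from the request method and path.
fn route<'a>(method: &str, path: &'a str) -> Route<'a> {
    match (method, path) {
        ("GET", "/") => Route::Index,
        ("GET", "/counter.json") => Route::Counter,
        // Any other GET path is either a /hello/<name> route or unknown.
        ("GET", p) => match p.strip_prefix("/hello/") {
            Some(name) => Route::Hello(name),
            None => Route::NotFound,
        },
        // Every other method is rejected, whatever the path.
        _ => Route::MethodNotAllowed,
    }
}
```

With this shape, a POST route becomes one more arm rather than another nested if. Note that strip_prefix also matches a bare /hello/, yielding an empty name, so a stricter site might want to reject that case.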

To return a response, Hyper re-exports Response (from the http crate). It has a nice simple builder pattern for building the responses. The serialization code is hand written using format!. Of course we could import serde but that's against the rules.
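Hand-written serialization is safe here because the only value is a number. As a rough illustration of what changes once a string is involved, here is a std-only sketch; counter_json mirrors the demo's format! call, while escape_json is a hypothetical helper covering only the basic JSON string escapes.

```rust
/// Builds the same body as the demo's `format!` call.
fn counter_json(count: usize) -> String {
    format!("{{\"counter\":{}}}", count)
}

/// Hypothetical helper: escapes quotes, backslashes and control characters
/// so that `input` can be placed between double quotes in a JSON document.
fn escape_json(input: &str) -> String {
    let mut out = String::with_capacity(input.len());
    for c in input.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters use the \uXXXX form.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}
```

This is the kind of detail a serialization library takes care of, which is why the hand-written approach only scales to very simple payloads.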

The counter is done by creating a struct in the initialization code and cloning it on every request to send to the handler function. Without going into the details, it uses Arc<AtomicUsize> instead of a usize: the Arc lets every clone of the context point at the same counter, and the atomic type lets multiple handlers increment it concurrently without a lock. The code increments the visitor counter before anything else in the handler function so that a visit is recorded for all requests.
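To make the role of Arc<AtomicUsize> a little more concrete, here is a minimal std-only sketch in which plain threads stand in for concurrent request handlers. The count_visits function is a hypothetical name used for illustration only.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::thread;

/// Spawns `threads` workers that each record `hits` visits on one shared
/// counter, then returns the final count.
fn count_visits(threads: usize, hits: usize) -> usize {
    let counter = Arc::new(AtomicUsize::new(0));
    let handles: Vec<_> = (0..threads)
        .map(|_| {
            // Cloning the Arc copies the pointer, not the counter itself.
            let counter = Arc::clone(&counter);
            thread::spawn(move || {
                for _ in 0..hits {
                    counter.fetch_add(1, Ordering::SeqCst);
                }
            })
        })
        .collect();
    for handle in handles {
        handle.join().expect("worker thread panicked");
    }
    counter.load(Ordering::SeqCst)
}
```

No increments are lost, because each fetch_add is a single atomic operation. Keep in mind that fetch_add returns the value from before the addition, not the new total.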

Hyper Verdict

In terms of development (on the low-end machine we used for profiling [2]), a debug build (without any of the build artifacts) takes 79.0s. After the initial compilation, incremental compilation takes only 1.9s. Building a release build with further optimizations (on top of the debug build artifacts) takes 32.5s.

The initialization code was taken from Hyper's server docs and is quite verbose. Out of the box, Hyper provides no logs or server information.

In terms of runtime performance, over three 30 second connections Hyper responded to 74,563 requests per second on average on the index route with the above code, which is incredibly quick!

Rocket

Rocket is a "web framework for Rust with a focus on ease-of-use, expressibility, and speed". It has 17.4k GitHub stars and 1.7M crate downloads. Rocket internally uses Hyper.

For this demo we are using the 0.5.0-rc.2 version of Rocket [3], which builds on stable Rust.

use rocket::{
    fairing::{Fairing, Info, Kind},
    get, launch, routes,
    serde::{json::Json, Serialize},
    Config, Data, Request, State,
};
use std::sync::atomic::AtomicUsize;

#[derive(Serialize, Default)]
#[serde(crate = "rocket::serde")]
struct AppContext {
    pub counter: AtomicUsize,
}

#[launch]
fn rocket() -> _ {
    let config = Config {
        port: 3000,
        ..Config::debug_default()
    };

    rocket::custom(&config)
        .attach(CounterFairing)
        .manage(AppContext::default())
        .mount("/", routes![hello1, hello2, counter])
}

struct CounterFairing;

#[rocket::async_trait]
impl Fairing for CounterFairing {
    fn info(&self) -> Info {
        Info {
            name: "Request Counter",
            kind: Kind::Request,
        }
    }

    async fn on_request(&self, request: &mut Request<'_>, _: &mut Data<'_>) {
        request
            .rocket()
            .state::<AppContext>()
            .unwrap()
            .counter
            .fetch_add(1, std::sync::atomic::Ordering::SeqCst);
    }
}

#[get("/")]
fn hello1() -> &'static str {
    "Hello World"
}

#[get("/hello/<name>")]
fn hello2(name: &str) -> String {
    format!("Hello, {}!", name)
}

#[get("/counter.json")]
fn counter(state: &State<AppContext>) -> Json<&AppContext> {
    Json(state.inner())
}

In Rocket we describe each endpoint using a function. The get macro attribute handles path routing and the HTTP method constraint. There is no need to add early returns for methods or deal with raw string slices. It takes the declarative approach: #[get("/hello/<name>")] is more descriptive and less verbose than if let Some(name) = path.strip_prefix("/hello/"). The functions are registered using .mount("/", routes![hello1, hello2, counter]).
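To get a feel for what a pattern like /hello/<name> expresses, the following std-only sketch matches a path against such a pattern segment by segment. It is purely illustrative: match_route is a hypothetical function, and Rocket's real router is more involved than this.

```rust
/// Hypothetical matcher: compares `path` with a pattern such as
/// `/hello/<name>` segment by segment and returns the captured parameters.
fn match_route<'p, 'u>(pattern: &'p str, path: &'u str) -> Option<Vec<(&'p str, &'u str)>> {
    let mut pattern_segments = pattern.split('/');
    let mut path_segments = path.split('/');
    let mut params = Vec::new();
    loop {
        match (pattern_segments.next(), path_segments.next()) {
            // Both ran out at the same time: the path matches.
            (None, None) => return Some(params),
            (Some(expected), Some(actual)) => {
                let dynamic = expected
                    .strip_prefix('<')
                    .and_then(|rest| rest.strip_suffix('>'));
                match dynamic {
                    // A `<name>` segment captures any non-empty value.
                    Some(key) if !actual.is_empty() => params.push((key, actual)),
                    Some(_) => return None,
                    // A fixed segment must match exactly.
                    None if expected == actual => {}
                    None => return None,
                }
            }
            // The pattern and the path have a different number of segments.
            _ => return None,
        }
    }
}
```

For example, matching /hello/jack against /hello/<name> yields the pair ("name", "jack"), while /hello/ and /hello/a/b do not match at all.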

The application has a state defined here:

#[derive(Serialize, Default)]
#[serde(crate = "rocket::serde")]
struct AppContext {
    pub counter: AtomicUsize,
}

And it is created and registered using .manage(AppContext::default()). Rocket re-exports the serialization library serde, so we can use #[derive(Serialize)] to generate the serialization logic for the counter state. There is no hand-written serialization code, unlike in the Hyper example.

In Rocket, endpoint functions can just return String or str slices and Rocket handles them automatically. Rocket also comes with a Json return type, and since AppContext implements Serialize we can build a Json response from it directly. The Json structure handles setting the Content-Type header automatically for us.

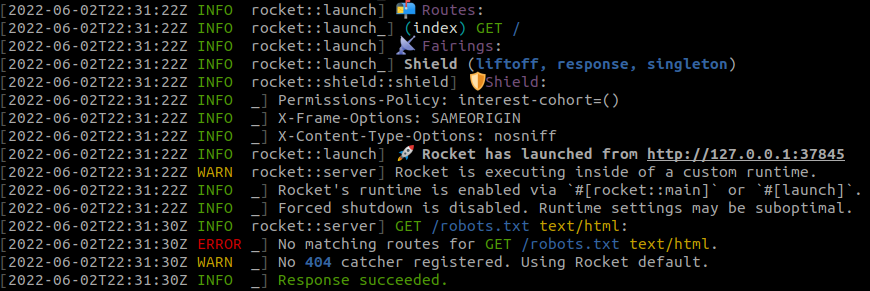

Rocket has a middleware implementation which it calls "fairings". The example defines a CounterFairing which modifies the counter state on every request. The initialization code is really slim: it sets up a config, and a Rocket structure is created using a builder pattern. Annotating the rocket function with #[launch] generates the entry point and abstracts how the server is spun up. Rocket also has really nice built-in logs which are great for development.
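The general idea behind a request hook can be sketched without any framework. In the std-only example below, RequestHook, CountingHook and dispatch are all hypothetical names; they are loosely modelled on the idea of a request fairing and do not reflect Rocket's actual traits or internals.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Hypothetical hook trait: called once for every incoming request.
trait RequestHook {
    fn on_request(&self, path: &str);
}

/// Counts every request it sees, whether or not a route matches later.
#[derive(Default)]
struct CountingHook {
    counter: AtomicUsize,
}

impl RequestHook for CountingHook {
    fn on_request(&self, _path: &str) {
        self.counter.fetch_add(1, Ordering::SeqCst);
    }
}

/// Runs all hooks first, then hands the request to the handler.
fn dispatch(hooks: &[&dyn RequestHook], path: &str, handler: impl Fn(&str) -> String) -> String {
    for hook in hooks {
        hook.on_request(path);
    }
    handler(path)
}
```

Because the hook runs before the handler is chosen, the handlers themselves never need to know that a counter exists, which is the separation the fairing gives the Rocket example.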

Rocket Verdict

Since Rocket has more dependencies and requires more macro expansion, it takes a bit longer to build: 141.9s (2m 21.9s) to compile from a cold start. A release build on top of the debug artifacts takes 147.0s (2m 27.0s) to compile. Incremental builds are still fast, taking 3.3s to compile after a small change to the response of an endpoint.

Using the same benchmark as Hyper, on average Rocket returned 43,899 requests per second in a release build with the logging disabled - roughly 60% of Hyper's throughput.

Conclusion

Both of these examples were fun to build and there weren't any frustrations or problems using the libraries. Both are fast enough that performance is unlikely to be a concern.

Rocket's documentation is very good and explanatory. All of Hyper's API is well documented on its docs.rs page. Both libraries are actively developed with many commits and pull requests made in the last month.

Do you prefer the control and speed of Hyper or prefer the expressiveness of Rocket?


Footnotes

1. The dependencies for building the project: hyper = { version = "0.14", features = ["server", "tcp", "http1"] } and tokio = { version = "1.18.2", features = ["rt", "macros", "rt-multi-thread"] }

2. The build and request profile machine is a VM with 2 cores and 7 GB RAM. Take the numbers with a grain of salt.

3. The dependencies for building the project: rocket = { version = "0.5.0-rc.2", features = ["json"] }