Introduction

Have you ever thought about making your own database of potential businesses for lead generation or product price data so you can get your products at the cheapest price without any effort? Web scraping is what lets you do that without having to do any of the manual work yourself. Rust makes this easier by allowing you to handle errors explicitly and run tasks concurrently, letting you do things like attaching a web service router to your scraper or a Discord bot that outputs the data.

In this guide to Rust web scraping, we will write a Rust web scraper that will scrape Amazon for Raspberry Pi products and get their prices, then store them in a PostgreSQL database for further processing.

Stuck or want to see the final code? The GitHub repository for this article can be found here.

Getting Started

Let's make a new project by using cargo shuttle init. For this project we'll simply call it webscraper - you'll want the none option for the framework, which will spawn a new Cargo project with shuttle-runtime added (as we aren't currently using a web framework, we don't need to pick any of the other options).

Let's install our dependencies with the following one liner:

cargo add chrono reqwest scraper tracing shuttle-shared-db sqlx --features shuttle-shared-db/postgres,sqlx/runtime-tokio-native-tls,sqlx/postgres,sqlx/chrono

We'll also want to install sqlx-cli, which is a useful tool for managing our SQL migrations. We can install it by running the following:

cargo install sqlx-cli

Running sqlx migrate add schema in our project folder creates a SQL migration file, which can be found in the migrations folder! The file name starts with the date and time at which the migration was created, followed by the name we gave it (in this case, schema). For our purposes, here is the migration we'll be using:

-- migrations/<timestamp>_schema.sql

CREATE TABLE IF NOT EXISTS products (

id SERIAL PRIMARY KEY,

name VARCHAR NOT NULL,

price VARCHAR NOT NULL,

old_price VARCHAR,

link VARCHAR,

scraped_at DATE NOT NULL DEFAULT CURRENT_DATE

);

Before we get started, we'll want to make a struct that implements shuttle_runtime::Service, which is an async trait. We'll also want to set our user agent so that there is less chance of us getting blocked. Thankfully, we can do all of this by returning a struct in our main function, like so:

// src/main.rs

use reqwest::Client;

use tracing::error;

use sqlx::PgPool;

struct CustomService {

ctx: Client,

db: PgPool

}

// Set up our user agent

const USER_AGENT: &str = "Mozilla/5.0 (Linux x86_64; rv:115.0) Gecko/20100101 Firefox/115.0";

// note that we add our Database as an annotation here so we can easily get it provisioned to us

#[shuttle_runtime::main]

async fn main(

#[shuttle_shared_db::Postgres] db: PgPool

) -> Result<CustomService, shuttle_runtime::Error> {

// automatically attempt to do migrations

// we only create the table if it doesn't exist which prevents data wiping

sqlx::migrate!().run(&db).await.expect("Migrations failed");

// initialise Reqwest client here so we can add it in later on

let ctx = Client::builder().user_agent(USER_AGENT).build().unwrap();

Ok(CustomService { ctx, db })

}

#[shuttle_runtime::async_trait]

impl shuttle_runtime::Service for CustomService {

async fn bind(mut self, _addr: std::net::SocketAddr) -> Result<(), shuttle_runtime::Error> {

scrape(self.ctx, self.db).await.expect("scraping should not finish");

error!("The web scraper loop shouldn't finish!");

Ok(())

}

}

Now that we're done, we can get started web scraping in Rust!

Making our Web Scraper

The first part of making our web scraper is making a request to our target URL so we can grab the response body to process. Thankfully, Amazon's URL syntax is quite simple, so we can easily customise the URL query parameters by adding the name of the search terms we want to look for. Because Amazon returns multiple pages of results, we also want to be able to set our page number as a mutable dynamic variable that will get incremented by 1 every time the request is successful.

// src/main.rs

use chrono::{Days, Local, NaiveDate};

#[derive(Clone, Debug)]

struct Product {

name: String,

price: String,

old_price: Option<String>,

link: String,

// the date on which the product was scraped

scraped_at: NaiveDate,

}

async fn scrape(ctx: Client) -> Result<(), String> {

let mut pagenum = 1;

let mut retry_attempts = 0;

let url = format!("https://www.amazon.com/s?k=raspberry+pi&page={pagenum}");

// send the request and bail out with the error message if it fails

let res = match ctx.get(url).send().await {

Ok(res) => res,

Err(e) => {

error!("Error while attempting to send HTTP request: {e}");

return Err(e.to_string());

}

};

// read the response body as text

let res = match res.text().await {

Ok(res) => res,

Err(e) => {

error!("Error while attempting to get the HTTP body: {e}");

return Err(e.to_string());

}

};

Ok(())

}
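
The URL construction above can be pulled out into a small helper so that the search term and page number are easy to change. This is only a sketch: search_url is a hypothetical name that is not part of the original code, and it only turns whitespace in the search term into + rather than doing full URL encoding.

```rust
/// Builds the Amazon search URL for a search term and a page number.
/// `search_url` is a hypothetical helper: it only replaces whitespace with `+`
/// and does not percent-encode other special characters.
fn search_url(search_term: &str, pagenum: u32) -> String {
    // "raspberry pi" becomes "raspberry+pi"
    let query = search_term.split_whitespace().collect::<Vec<_>>().join("+");
    format!("https://www.amazon.com/s?k={query}&page={pagenum}")
}
```

Calling search_url("raspberry pi", pagenum) inside the request loop would then produce the same URL as the format! call in the snippet above.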

As you may have noticed, we added a variable named retry_attempts. This is because sometimes when we're scraping, Amazon (or any other site for that matter) may give us a 503 Service Unavailable, meaning that the scraping will fail. Sometimes this can be caused by server overload or us scraping too quickly, so we can model our error handling like this:

// src/main.rs

use reqwest::StatusCode;

use std::thread::sleep as std_sleep;

use tokio::time::Duration;

// declared once, before the request loop, so the count persists between attempts

let mut retry_attempts = 0;

if res.status() == StatusCode::SERVICE_UNAVAILABLE {

error!("Amazon returned a 503 at page {pagenum}");

retry_attempts += 1;

if retry_attempts >= 10 {

// take a break if too many retry attempts

error!("It looks like Amazon is blocking us! We will rest for an hour.");

// sleep for an hour then retry on current iteration

std_sleep(Duration::from_secs(3600));

continue;

} else {

std_sleep(Duration::from_secs(15));

continue;

}

}

retry_attempts = 0;
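
The waiting rule in the snippet above boils down to a single decision: a short pause for the first few failures and a long one once we've failed too often. A minimal sketch of that rule as a pure function is shown below. The names retry_delay and MAX_RETRIES are hypothetical and only used for illustration; the durations are the ones used above.

```rust
use std::time::Duration;

/// Number of consecutive 503 responses after which we take the long break.
/// (Hypothetical constant name; the value 10 comes from the snippet above.)
const MAX_RETRIES: u32 = 10;

/// Returns how long to wait before retrying after `retry_attempts`
/// consecutive 503 responses. `retry_delay` is a hypothetical helper.
fn retry_delay(retry_attempts: u32) -> Duration {
    if retry_attempts >= MAX_RETRIES {
        // too many failures in a row: rest for an hour
        Duration::from_secs(3600)
    } else {
        // otherwise wait 15 seconds and try the same page again
        Duration::from_secs(15)
    }
}
```

Keeping the rule in one place makes it easier to tune the numbers later without touching the request loop.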

Assuming the HTTP request is successful, we'll get a HTML body that we can parse using scraper.

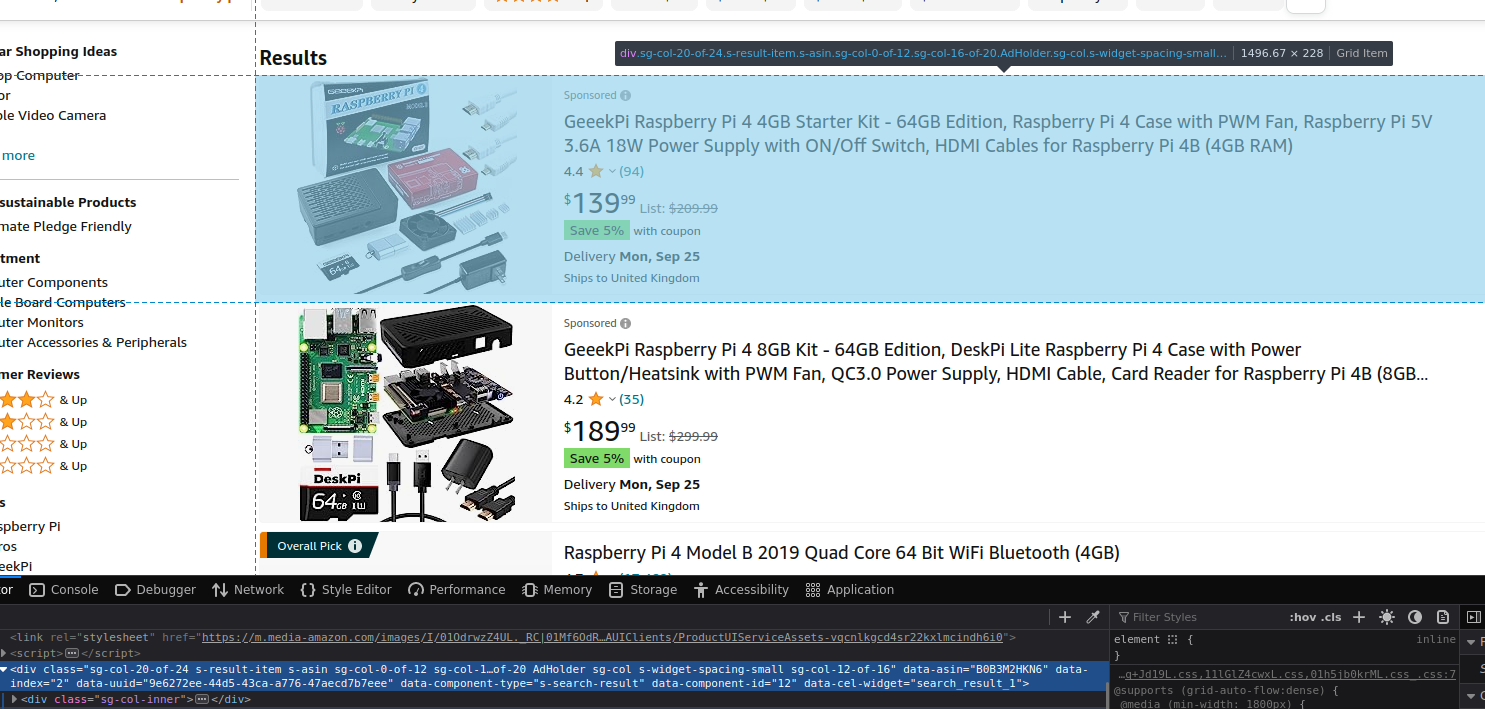

If you go to Amazon in your browser and search for "raspberry pi", you'll then receive a product list. You can examine this product list by using the dev tools function on your browser (in this instance, it's the Inspect function in Firefox but you can also use Chrome Devtools, Microsoft Edge DevTools, etc...). It should look like the following:

You might notice that the div element has a data attribute called data-component-type with a value of s-search-result. This is helpful for us, as no page components other than the ones we want to scrape have that attribute! Therefore, we can pick out the data with a CSS selector (see below for more information). We'll want to make sure we prepare our HTML by parsing it as a HTML fragment, and then we can declare our initial scraper::Selector:

// src/main.rs

use scraper::{Html, Selector};

let html = Html::parse_fragment(&res);

let selector = Selector::parse("div[data-component-type='s-search-result']").unwrap();

As you can see, the Selector uses CSS selectors to be able to parse the HTML. In this case, we are specifically attempting to search for a HTML div element that has a data attribute called "data-component-type" with a value of "s-search-result".

If you call html.select(&selector) as per the scraper documentation, you'll see that it returns an iterator over the matching HTML elements. However, because the iterator can also be empty, we'll want to make sure that there are actually things we can iterate over, so let's cover that case by adding an if statement to check the iterator count:

// src/main.rs

if html.select(&selector).count() == 0 {

error!("There's nothing to parse here!");

break

};

In the final version of the app, this check should just break the page loop: the first page should always have product results, so an empty page normally signals that there are no more products we can retrieve.
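
To make the stopping rule concrete, here is a minimal sketch of a page loop that keeps going until a page comes back empty. It deliberately leaves out the HTTP and HTML parts: fetch_page is a stand-in for "request the page and select the products", and both it and collect_all_pages are hypothetical names rather than anything from reqwest or scraper.

```rust
/// Collects items page by page until a page comes back empty.
/// `fetch_page` stands in for "request the page and select the products";
/// both names are hypothetical and only used for illustration.
fn collect_all_pages<F>(mut fetch_page: F) -> Vec<String>
where
    F: FnMut(u32) -> Vec<String>,
{
    let mut items = Vec::new();
    let mut pagenum = 1;
    loop {
        let page_items = fetch_page(pagenum);
        // an empty page signals that there is nothing left to retrieve
        if page_items.is_empty() {
            break;
        }
        items.extend(page_items);
        // only move on to the next page after a successful one
        pagenum += 1;
    }
    items
}
```

In the real scraper the closure's job is done by the request, the 503 handling and the selector, but the shape of the loop is the same.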

Now that we've done our respective error handling, we can iterate through the entries and create a Product, then append it to our vector of Products.

// src/main.rs

for entry in html.select(&selector) {

// declaring more Selectors to use on each entry

let price_selector = Selector::parse("span.a-price > span.a-offscreen").unwrap();

let productname_selector = Selector::parse("h2 > a").unwrap();

let name = entry.select(&productname_selector).next().expect("Couldn't find the product name").text().next().unwrap().to_string();

// Amazon products can have two prices: a current price, and an "old price". We iterate through both of these and map them to a Vec<String>.

let price_text = entry.select(&price_selector).map(|x| x.text().next().unwrap().to_string()).collect::<Vec<String>>();

// get local date from chrono for database storage purposes

let scraped_at = Local::now().date_naive();

// here we find the anchor element and find the value of the href attribute - this should always exist so we can safely unwrap

let link = entry.select(&productname_selector).map(|link| {format!("https://www.amazon.com{}", link.value().attr("href").unwrap())}).collect::<String>();

vec.push(Product {

name,

price: price_text[0].clone(),

// not every product has an old price, so avoid indexing out of bounds

old_price: price_text.get(1).cloned(),

link,

scraped_at,

});

}

pagenum += 1;

std_sleep(Duration::from_secs(20));

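Note that in the above code block we use sleep from the standard library. If we attempt to use tokio::time::sleep here, the compiler returns an error, because the parsed Html value is not Send and would be held across an await point, which makes the whole future non-Send. Bear in mind that std::thread::sleep blocks the current thread instead of yielding to the async runtime.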
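
The loop above indexes into price_text directly, which assumes that every product has at least one price. A minimal sketch of a more defensive way to split the scraped prices is shown below. split_prices is a hypothetical helper, not part of the original code, and the dollar amounts in the usage note are made-up sample values.

```rust
/// Splits the scraped price strings into a current price and an optional old price.
/// Returns `None` when no price was found at all.
/// `split_prices` is a hypothetical helper used for illustration.
fn split_prices(price_text: &[String]) -> Option<(String, Option<String>)> {
    // the first price is the current one; without it there is nothing to store
    let price = price_text.first()?.clone();
    // the second price, when present, is the "old price"
    let old_price = price_text.get(1).cloned();
    Some((price, old_price))
}
```

With this, a product that has no price at all can be skipped (for example with a let-else and continue) instead of causing a panic, and a product with a single price such as "$35.00" simply gets an old_price of None.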

Now that we've written our code for processing the data we've gathered from the web page, we can wrap what we've written so far in a loop, moving our Vec<Product> and pagenum declarations to an outer loop that will run infinitely. Next, we'll want to make sure we have somewhere to save our data! We'll want to use a batched transaction here, which thankfully we can do by calling db.begin() to start the transaction and then commit() on the transaction to finalise it. Check the code out below:

// src/main.rs

let mut transaction = db.begin().await.unwrap();

for product in vec {

if let Err(e) = sqlx::query("INSERT INTO

products

(name, price, old_price, link, scraped_at)

VALUES

($1, $2, $3, $4, $5)

")

.bind(product.name)

.bind(product.price)

.bind(product.old_price)

.bind(product.link)

.bind(product.scraped_at)

// run the query inside the transaction rather than directly on the pool

.execute(&mut *transaction)

.await {

error!("There was an error: {e}");

error!("This web scraper will now shut down.");

// undo the inserts made so far, then stop the scraper

transaction.rollback().await.unwrap();

return Err(e.to_string());

}

}

transaction.commit().await.unwrap();

All we're doing here is just running a for loop over the list of scraped products and inserting them all into the database, then committing at the end to finalise it.

Now ideally we'll want the scraper to rest for some time so that the pages are given time to update. Otherwise, if you comb the pages all the time, you will more than likely end up with a huge amount of duplicate data. Let's say we wanted it to rest until midnight:

// src/main.rs

use tokio::time::{sleep as tokio_sleep, Duration};

// get the local time, add a day then get the NaiveDate and set a time of 00:00 to it

let tomorrow_midnight = Local::now()

.checked_add_days(Days::new(1))

.unwrap()

.date_naive()

.and_hms_opt(0, 0, 0)

.unwrap();

// get the local time now

let now = Local::now().naive_local();

// check the amount of time between now and midnight tomorrow

let duration_to_midnight = tomorrow_midnight.signed_duration_since(now).to_std().unwrap();

// sleep for the required time

tokio_sleep(Duration::from_secs(duration_to_midnight.as_secs())).await;
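
The arithmetic behind the snippet above is simply "one day minus the time that has already passed today". The sketch below shows that calculation on its own using only the standard library. duration_until_midnight and SECS_PER_DAY are hypothetical names, and the function assumes every day is exactly 24 hours long, so it ignores daylight saving changes.

```rust
use std::time::Duration;

/// Seconds in a day, assuming no daylight saving change (hypothetical constant).
const SECS_PER_DAY: u64 = 24 * 60 * 60;

/// Given the number of seconds elapsed since the last midnight,
/// returns how long to sleep until the next one.
/// `duration_until_midnight` is a hypothetical helper used for illustration.
fn duration_until_midnight(secs_since_midnight: u64) -> Duration {
    // at exactly midnight this yields a full day, matching the
    // "add a day, then take 00:00" approach used above
    Duration::from_secs(SECS_PER_DAY - (secs_since_midnight % SECS_PER_DAY))
}
```

With chrono, the input could come from the Timelike trait's num_seconds_from_midnight() on Local::now(), converted to u64.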

Now we're pretty much done!

Your final scraping function should look like this:

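// src/main.rs

// the final version uses these aliases for the two kinds of sleep, plus the debug! macro

use std::thread::sleep as StdSleep;

use std::time::Duration as StdDuration;

use tokio::time::{sleep as TokioSleep, Duration as TokioDuration};

use tracing::debug;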

async fn scrape(ctx: Client, db: PgPool) -> Result<(), String> {

debug!("Starting scraper...");

loop {

let mut vec: Vec<Product> = Vec::new();

let mut pagenum = 1;

let mut retry_attempts = 0;

loop {

let url = format!("https://www.amazon.com/s?k=raspberry+pi&page={pagenum}");

let res = match ctx.get(url).send().await {

Ok(res) => res,

Err(e) => {

error!("Something went wrong while fetching from url: {e}");

StdSleep(StdDuration::from_secs(15));

continue;

}

};

if res.status() == StatusCode::SERVICE_UNAVAILABLE {

error!("Amazon returned a 503 at page {pagenum}");

retry_attempts += 1;

if retry_attempts >= 10 {

error!("It looks like Amazon is blocking us! We will rest for an hour.");

StdSleep(StdDuration::from_secs(3600));

continue;

} else {

StdSleep(StdDuration::from_secs(15));

continue;

}

}

let body = match res.text().await {

Ok(res) => res,

Err(e) => {

error!("Something went wrong while turning data to text: {e}");

StdSleep(StdDuration::from_secs(15));

continue;

}

};

debug!("Page {pagenum} was scraped");

let html = Html::parse_fragment(&body);

let selector =

Selector::parse("div[data-component-type='s-search-result']").unwrap();

if html.select(&selector).count() == 0 {

break;

};

for entry in html.select(&selector) {

let price_selector = Selector::parse("span.a-price > span.a-offscreen").unwrap();

let productname_selector = Selector::parse("h2 > a").unwrap();

let price_text = entry

.select(&price_selector)

.map(|x| x.text().next().unwrap().to_string())

.collect::<Vec<String>>();

vec.push(Product {

name: entry

.select(&productname_selector)

.next()

.expect("Couldn't find the product name!")

.text()

.next()

.unwrap()

.to_string(),

price: price_text[0].clone(),

// not every product has an old price

old_price: price_text.get(1).cloned(),

link: entry

.select(&productname_selector)

.map(|link| {

format!("https://www.amazon.com{}", link.value().attr("href").unwrap())

})

.collect::<String>(),

scraped_at: Local::now().date_naive(),

});

}

pagenum += 1;

retry_attempts = 0;

StdSleep(StdDuration::from_secs(15));

}

let mut transaction = db.begin().await.unwrap();

for product in vec {

if let Err(e) = sqlx::query(

"INSERT INTO products

(name, price, old_price, link, scraped_at)

VALUES

($1, $2, $3, $4, $5)"

)

.bind(product.name)

.bind(product.price)

.bind(product.old_price)

.bind(product.link)

.bind(product.scraped_at)

// run the query inside the transaction rather than directly on the pool

.execute(&mut *transaction)

.await

{

error!("There was an error: {e}");

error!("This web scraper will now shut down.");

// undo the inserts made so far, then stop the scraper

transaction.rollback().await.unwrap();

return Err(e.to_string());

}

}

transaction.commit().await.unwrap();

// get the local time, add a day then get the NaiveDate and set a time of 00:00 to it

let tomorrow_midnight = Local::now()

.checked_add_days(Days::new(1))

.unwrap()

.date_naive()

.and_hms_opt(0, 0, 0)

.unwrap();

// get the local time now

let now = Local::now().naive_local();

// check the amount of time between now and midnight tomorrow

let duration_to_midnight = tomorrow_midnight

.signed_duration_since(now)

.to_std()

.unwrap();

// sleep for the required time

TokioSleep(TokioDuration::from_secs(duration_to_midnight.as_secs())).await;

}

Ok(())

}

And we're done!

Deploying

If you initialised your project on the Shuttle servers, you can get started by using cargo shuttle deploy (adding --allow-dirty if on a dirty Git branch). If not, you'll want to use cargo shuttle project start --idle-minutes 0 to get your project up and running.

Finishing Up

Thanks for reading this article! I hope you have a more thorough understanding of how to start web scraping in Rust, using the Rust Reqwest and scraper crates.

Ways to extend this article:

- Add a frontend so you can show stats for your scraper bot

- Add a proxy for your web scraper

- Scrape more than one website